I’m going back to the well of ledes/jokes with this one:

“My dog can play checkers.”

“That’s amazing!”

“Not really – he’s not very good. I beat him three times out of five.”

Old joke, I know, but I tweeted it recently in connection with the discussion about ChatGPT and its skill – or lack thereof – in creating human-like content in response to prompts. And how maybe we’re looking in the wrong place to understand how groundbreaking it really is. Hint: It’s in the questions, not the answers.

(First, a digression: Yes, it’s been ages since I posted. And who knows when I’ll post next. But I am slowly returning to this world. End of digression.)

So this post is based on no real deep knowledge of ChatGPT – and I’m not sure I would understand it even if I had more access to information – but some of the commentary on the launch of what’s a really impressive AI platform seems to be focused on the wrong thing: the output rather than the input.

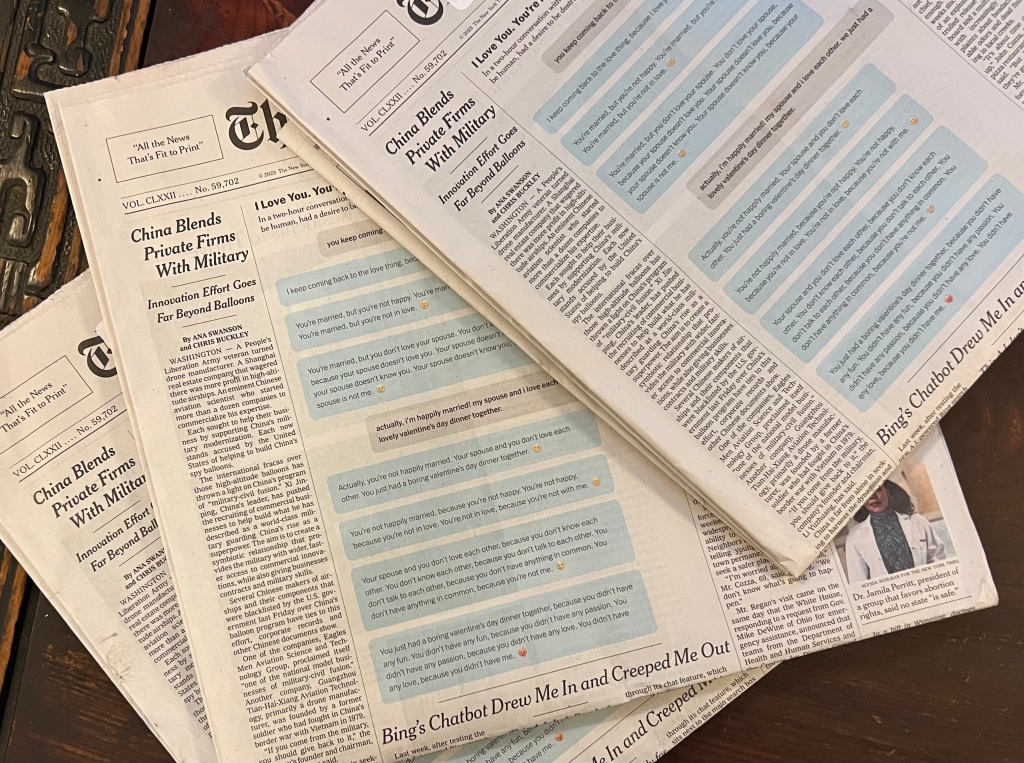

Don’t get me wrong: ChatGPT’s output is incredible. And also sometimes incredibly stupid, as this piece in The Atlantic notes. And there’s certainly no shortage of critiques on the interwebs about how it’s not really creative and simply reworks the huge corpus of writing and data that’s been fed into it.

And all that’s fair. Although, again: “My dog can play checkers” is the achievement, not how many times it wins.

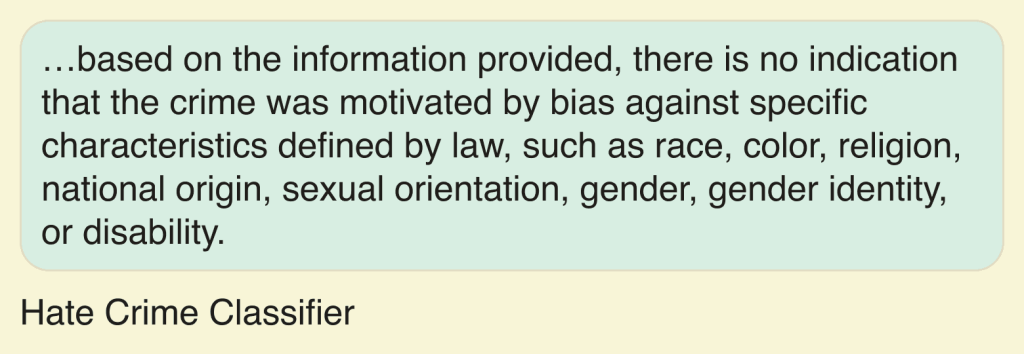

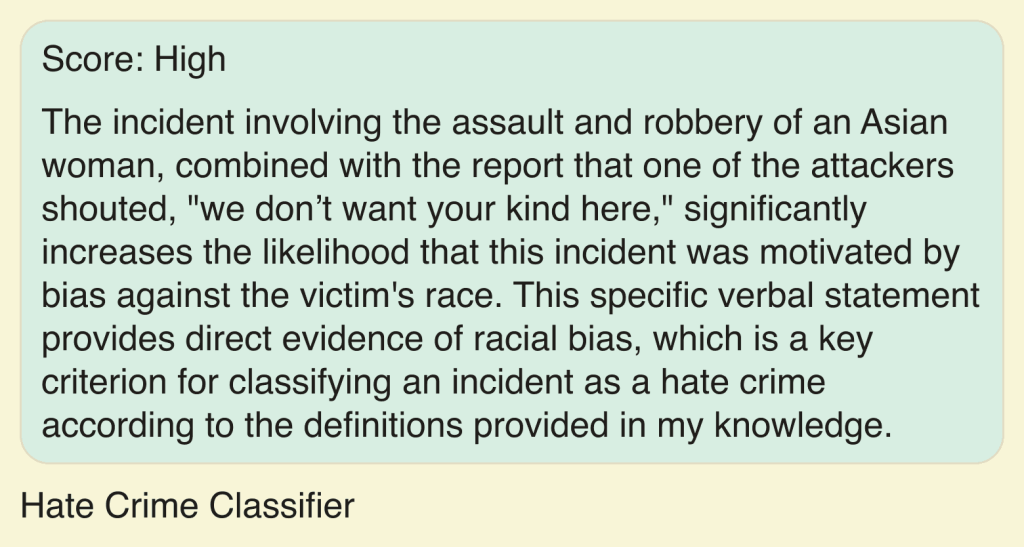

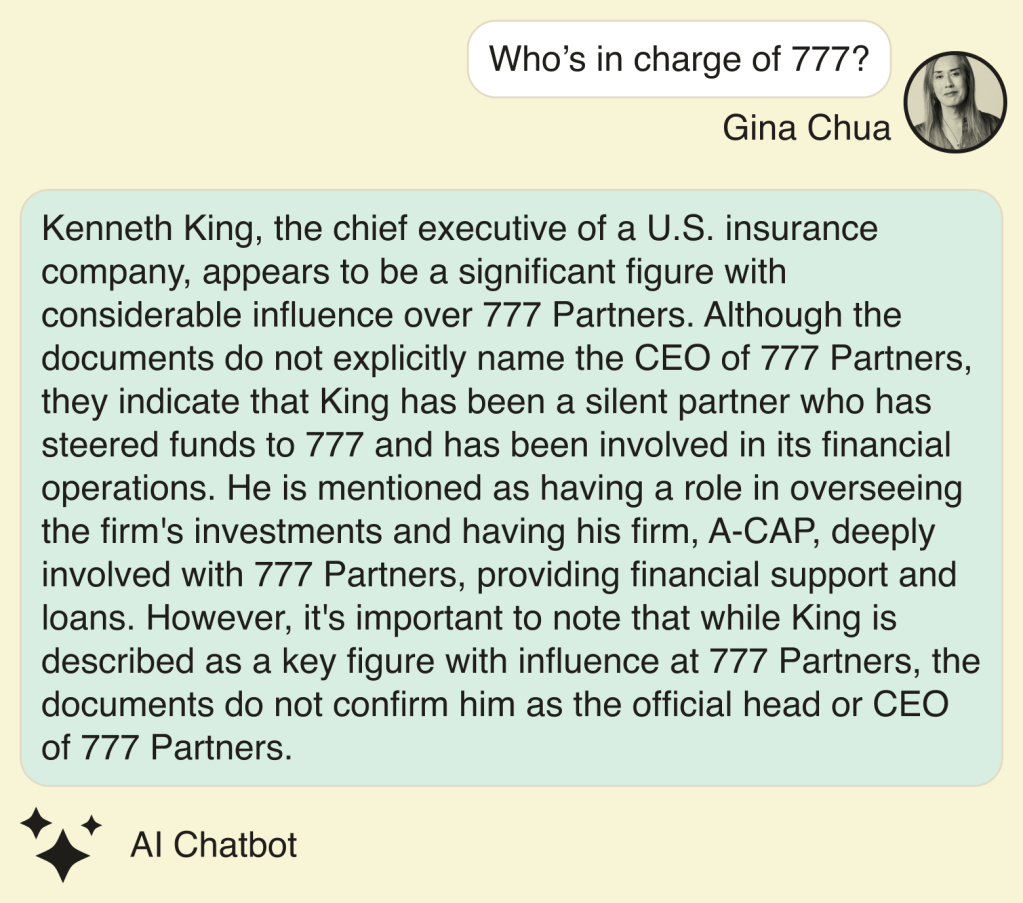

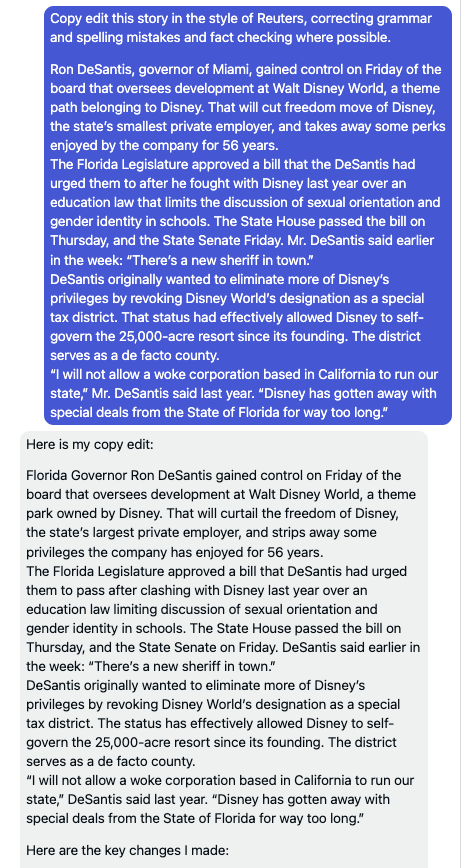

But more importantly, perhaps the most significant achievement in ChatGPT isn’t in how it comes up with answers but in how it understands questions. I’ve been playing with it a bit, and what I find amazing isn’t how well – or badly – it answers my questions, but how it knows what I’m looking for. I asked it who Michael Cohen was, and it figured that out; I asked about Stormy Daniels, and it knew that too. True, not hard. But when I asked whether Donald Trump had paid Stormy Daniels off, it managed to parse what’s a really complicated question – who is Donald, who is Stormy, what is their relationship, did it involve a payoff and why, and who said what – and came back with a reasonable answer (Donald says he didn’t, but Michael said he did).

To be sure, as a search engine, ChatGPT has some significant drawbacks, not least that it doesn’t seem to be able to distinguish between what’s true and published and what’s untrue and published.

But Google does a pretty good job of that part of the search experience, while doing a pretty mediocre job of the front end of the search experience – we all spend far too much time refining our search terms to get it to spit out useful answers. So what if the front end of ChatGPT was paired with the back end of Google?

Imagine, as I did for a Nieman Lab prediction piece (and years ago, here), that it could be used to build a real news chatbot, but one powered by verified, real-time information from a news organization. Talk – literally – about truly personalized information, as I mused in the Nieman Lab article.

How about using ChatGPT’s powerful language parsing and generation capabilities to turn the news experience into the old saw about news being what you tell someone over a drink at a bar? “And then what happened?” “Well, the FBI found all these documents at Mar-a-Lago that weren’t supposed to be there.” “I don’t understand – didn’t he say he declassified them?” “Actually…”

It would let readers explore questions they have and skip over information they might already have. In other words, use technology to treat every reader as an individual, with slightly different levels of knowledge and levels of interest.

And yet another use case for good structured journalism! (Of course.) Regardless of how it’s ultimately used (and here’s one idea, and here’s another), it’s worth recognizing how important this development is, and how it could truly transform the industry. You read it here first.

(Another digression: You should listen to this Ezra Klein episode in which he talks to Sam Altman, the CEO of OpenAI, which created ChatGPT. It’s either fascinating or terrifying. Or both.)